Building a RAG Chatbot with LlamaIndex

With the rise in the popularity of Large Language Models (LLMs) and generative search technologies, it is no surprise that this tech has changed the way we work. Almost every individual now has some form of AI in their workflow. Because of this rising interest, I too wanted to explore the capabilities of this technology. But not as a user. I wanted to make something with AI. So I started researching on different use cases of large language models and one that really fascinated me was Retrieval Augmented Generation aka RAG.

What is Retrieval-Augmented Generation (RAG)?

Retrieval-augmented generation (RAG) is a technique for enhancing the accuracy and reliability of generative AI models with facts fetched from external sources.

Under the hood LLMs are neural networks, typically measured by how many parameters they contain. An LLM’s parameters essentially represent the general patterns of how humans use words to form sentences. That deep understanding, sometimes called parameterized knowledge, makes LLMs useful in responding to general prompts at light speed. However, it does not serve users who want a deeper dive into a current or more specific topic. Which often results in hallucinations (LLM spitting out whatever it wants).

Here is where RAG comes in.

RAG has two phases: retrieval and content generation. In the retrieval phase, algorithms search for and retrieve snippets of information relevant to the user’s prompt or question. This assortment of external knowledge is appended to the user’s prompt and passed to the language model. In the generative phase, the LLM draws from the augmented prompt and its internal representation of its training data to synthesize an engaging answer tailored to the user in that instant. The answer can then be passed to a chatbot with links to its sources.

In short: The process of retrieving relevant documents and passing them to a language model to answer questions is known as Retrieval-Augmented Generation (RAG).

Side note: I may have copy-pasted the above text from somewhere. I just have forgotten where I got it from 😂

LlamaIndex

LlamaIndex is a data framework that enables you to build context-augmented generative AI applications. Your data could be in any form such as PDF documents, in an SQL database, behind an API, in excel documents or even audio files. LlamaIndex provides you with a library to ingest, parse and process your data so that it can be consumed by a LLM to execute complex query workflows. It provides you tools like

- data connectors to ingest data from various sources,

- data indexes that structure and transform your data into a representations that LLMs could understand,

- engines such as query engines for question-answering workflows, and chat engines for conversational flows.

- agents that are LLM powered tools

- and integrations for observability and evaluation of your framework!

More info: https://docs.llamaindex.ai/en/stable/#introduction

Echo - A Full Stack RAG Chatbot

The Idea

Echo is a full stack RAG chatbot that enables you to retrieve information faster and ask questions about your documents with the power of AI. Built using Next.js and LlamaIndex, this project uses the Meta Llama 3 model as the underlying LLM via the Hugging Face Inference API (rate limits apply). To get started, users can upload their documents to Echo and have a question-answer based conversation with the chatbot.

The Choice of Tech Stack

At the time of this project, I wanted to build something without incurring any costs. Hence, my choice of tech stack involved choosing technologies and services that were either free or had a generous free tier. This project is built on the Next.js framework and uses LlamaIndex for RAG, Supabase for authentication and file storage, and Pinecone for storing vector embeddings.

For the LLM, I chose Meta’s Llama 3 model via the Hugging Face Inference API. If you don’t want to use the Inference API, you can run the models locally through Ollama as LlamaIndex has support for that as well. But I did not want to run the models locally due to CPU and memory constraints + I wanted a fully functional production ready application that I could deploy to the web. So I preferred something that was behind an API and I could just call it in my application.

Application Flow

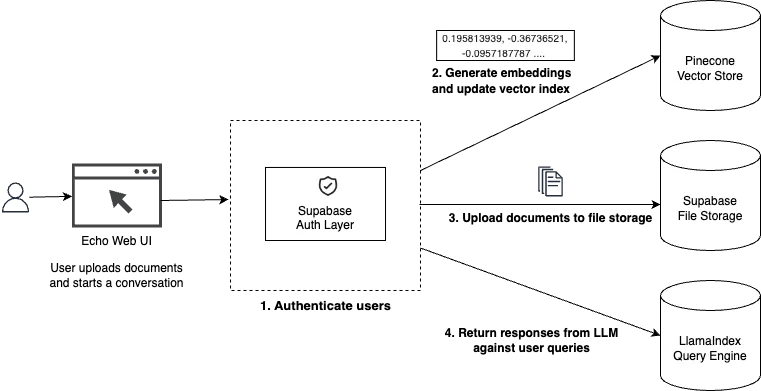

Following is a basic application flow of Echo.

1. Authenticate User

To authenticate the users, I am using Supabase Auth’s anonymous sign-in feature. Anonymous sign-ins allow you to build apps which provide users an authenticated experience without requiring users to enter an email address, password, use an OAuth provider or provide any other PII (Personally Identifiable Information). This is super handy in my case because I didn’t want to create permanent users and collect any personally identifiable information. When a user opens up the main page, the application signs in the user anonymously by storing a token in the browser’s cookie. For every subsequent user action/API call, the auth layer will validate the user’s access token JWT on the server.

2. Generate Embeddings and Update Vector Index

The next step in the process is to parse the uploaded documents and create vector embeddings of the data. This step is the most essential because in order to query the LLM in a context-aware way we must provide it the context in a form it would understand. This almost always means creating vector embeddings — numerical representations of the data. LlamaIndex does this all under the hood with its VectorStoreIndex module. The embeddings are persisted to Pinecone which is a vector database.

3. Upload Documents to File Storage

I also store the user’s originally uploaded documents to the cloud so that they could easily refer to the documents while having a conversation with echo in a split view. The documents are uploaded to a Supabase’s storage bucket. One of the most useful feature of Supabase is row level security. RLS allows you to create Security Access Policies to restrict access based on your business needs. You can configure it to only allow authenticated users to perform operations (select, insert, update, delete) on the storage objects that they have access to.

4. Return Response from LLM Against User Queries

Getting a query response from the LLM through LlamaIndex is literally just two lines of code:

const queryEngine = index.asQueryEngine();

const response = await queryEngine.query({ query: "query string" });Query engine is a generic interface that allows you to ask question over your data. A query engine takes in a natural language query, and returns a rich response. LlamaIndex also provides support for a ChatEngine which allows you to have a conversation with your data (multiple back-and-forth instead of a single question & answer). I was not able to get that working therefore I setup a simple proof of concept with the Query Engine.

Demo

Known Issues

There are couple of known issues that I plan to fix in future versions:

- Parsing takes too long on PDFs with large number of pages.

- LLM response truncates if it’s too long.

- Need to use Chat Engine from LlamaIndex instead of Query Engine.

Resources

For more information, take a look at the following resources:

- Next.js Documentation - learn about Next.js features and API.

- LlamaIndex Documentation

- Supabase Documentation

- Pinecone Documentation

Parting Thoughts

I want to take a moment and talk about the intention of this project. My goal with this project was to get my hands dirty with something new. Also whenever I searched online for demos and tutorials on RAG, I always came across Python notebooks explaining the process but not many full stack applications. When we are making a full stack application, complete with a user interface and backend API, there are multiple things to put together. You have to make your data interoperable. You have to think of constraining your inputs or your application logic goes haywire. You have to think from a product perspective as well. So this project has taught me a lot in that aspect. Limitations and issues will always be there. A product is never complete. We keep iterating and improving upon it. Tech keeps changing and we keep updating. It’s a process of continuous improvement.

In short, it’s the journey, not the destination. 😊